
Testing Network Resilience: Simulating Internet Outages for Learning

A tech enthusiast recently simulated internet outages in a home lab using standard Linux tools, aiming to evaluate recovery procedures, alerting, and overall network reliability. What began as a straightforward task quickly became a complex exploration of network behavior under stress, surfacing both useful insights and unexpected challenges.

Understanding the Motivation Behind Simulations

The primary objective of simulating internet outages was to create a controlled environment where the effects of connectivity loss could be observed. Early findings highlighted a critical issue: the DNS server emerged as a single point of failure, impacting various components across the network. By deliberately inducing failures, the individual aimed to develop more effective recovery strategies and improve overall system robustness.

Another key motivation was to practice troubleshooting under pressure. During an unexpected outage it is easy to forget procedures and respond inefficiently. By running these simulations, the tech enthusiast could refine scripts, improve log analysis, and tune monitoring tools while minimizing the risk of permanent damage. This hands-on practice shortened response times and clarified the documentation.

A sense of curiosity also played a significant role. By testing scenarios such as slow DNS resolution or intermittent packet loss, the individual uncovered insights that would not have been apparent during complete outages. These smaller degradations provided information about how sensitive the services were to instability, allowing for fine-tuning aimed at increasing reliability.

Setting Up the Simulation Environment

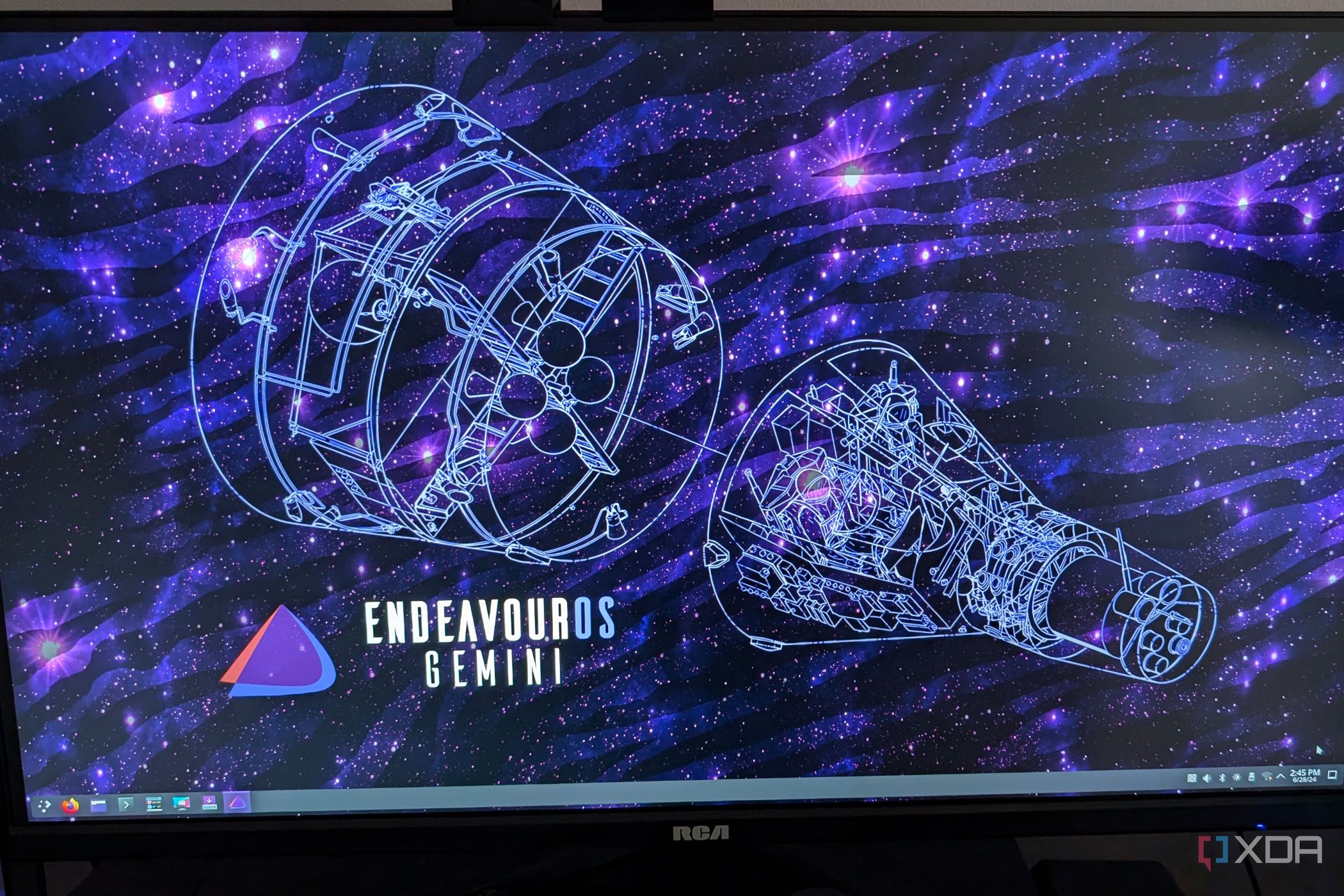

The simulated outages were built on basic Linux utilities. The tc command, typically through its netem queueing discipline, introduced artificial latency and packet loss, while iptables and nftables handled selective traffic blocking. Together they covered a range of conditions, from high latency to total disconnection, without adding new dependencies to the system.
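As a rough illustration of the kind of commands involved, the sketch below only builds the argument lists for tc and iptables; it does not run them. The interface name eth0, the helper names, and the choice to target UDP port 53 are assumptions made for the example, not details from the experiment.

```python
from typing import List


def netem_add(iface: str, delay_ms: int = 0, loss_pct: float = 0.0) -> List[str]:
    """Build a tc command that attaches a netem qdisc to `iface`."""
    cmd = ["tc", "qdisc", "add", "dev", iface, "root", "netem"]
    if delay_ms > 0:
        cmd += ["delay", f"{delay_ms}ms"]   # fixed added latency
    if loss_pct > 0:
        cmd += ["loss", f"{loss_pct:g}%"]   # random packet loss
    return cmd


def netem_del(iface: str) -> List[str]:
    """Build the tc command that removes the root qdisc again."""
    return ["tc", "qdisc", "del", "dev", iface, "root"]


def dns_drop_rule(action: str) -> List[str]:
    """Build an iptables rule dropping outbound UDP DNS queries.

    `action` is "-A" to append the rule or "-D" to delete it.
    """
    if action not in ("-A", "-D"):
        raise ValueError("action must be '-A' or '-D'")
    return ["iptables", action, "OUTPUT", "-p", "udp", "--dport", "53", "-j", "DROP"]
```

Joining `netem_add("eth0", delay_ms=200, loss_pct=10)` with spaces gives `tc qdisc add dev eth0 root netem delay 200ms loss 10%`. Running any of these requires root, for example through `subprocess.run(cmd, check=True)`. The DNS rule covers UDP only; DNS can also use TCP port 53, which would need a second rule.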

Network namespaces were instrumental in isolating experiments. By creating virtual environments, the individual could execute multiple failure scenarios simultaneously without affecting the entire network. If a problem arose, deleting a namespace quickly restored connectivity, making the process efficient and manageable.
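A minimal sketch of that isolation pattern follows, assuming a namespace connected to the host by a veth pair. The names lab1, veth-host, and veth-lab are hypothetical, IP addressing is left out, and the functions only build the ip commands rather than running them.

```python
from typing import List

Command = List[str]


def namespace_setup(ns: str, host_if: str, ns_if: str) -> List[Command]:
    """Commands that create a namespace and wire it to the host with a veth pair."""
    return [
        ["ip", "netns", "add", ns],
        ["ip", "link", "add", host_if, "type", "veth", "peer", "name", ns_if],
        ["ip", "link", "set", ns_if, "netns", ns],           # move one end inside
        ["ip", "link", "set", host_if, "up"],
        ["ip", "netns", "exec", ns, "ip", "link", "set", ns_if, "up"],
        ["ip", "netns", "exec", ns, "ip", "link", "set", "lo", "up"],
    ]


def in_namespace(ns: str, cmd: Command) -> Command:
    """Wrap a command so that it runs inside the namespace."""
    return ["ip", "netns", "exec", ns] + cmd


def namespace_teardown(ns: str) -> List[Command]:
    """Single command that discards the namespace and what lives in it."""
    return [["ip", "netns", "del", ns]]
```

A fault can then be scoped to the namespace, for example `in_namespace("lab1", ["tc", "qdisc", "add", "dev", "veth-lab", "root", "netem", "loss", "100%"])`, so the host's own interfaces are never touched.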

To evaluate application-level responses, lightweight containers were set up to simulate upstream services. Some containers responded with delays, while others refused connections altogether. These mock services allowed for the examination of retry behaviors and fault tolerance under realistic conditions. Comprehensive logging and cleanup scripts ensured that each session remained consistent and free from lingering settings.
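The article does not say what the mock containers ran. As one possible stand-in, the sketch below uses Python's standard library to serve a fixed response after a configurable delay or with an error status. The function names, the response body, and the loopback address are assumptions for illustration.

```python
import threading
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def make_handler(delay_s: float = 0.0, status: int = 200):
    """Create a handler class that waits `delay_s` and then answers with `status`."""

    class MockUpstream(BaseHTTPRequestHandler):
        def do_GET(self):
            time.sleep(delay_s)                      # simulate a slow upstream
            body = b"mock upstream\n"
            self.send_response(status)
            self.send_header("Content-Type", "text/plain")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, fmt, *args):
            pass                                     # keep test output quiet

    return MockUpstream


def start_mock(delay_s: float = 0.0, status: int = 200, port: int = 0) -> ThreadingHTTPServer:
    """Start the mock on 127.0.0.1 in a background thread; port 0 picks a free port."""
    server = ThreadingHTTPServer(("127.0.0.1", port), make_handler(delay_s, status))
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

`start_mock(delay_s=2.0)` gives a slow dependency and `start_mock(status=503)` a failing one; `server.server_address[1]` reports the chosen port. A refused connection needs no mock at all: pointing the client at a port with no listener produces it.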

Challenges Encountered and Lessons Learned

Despite careful planning, the experiments did not run smoothly. One significant problem was leftover kernel state: traffic-control and firewall rules persist until they are explicitly removed, so a test could leave partial rules behind that blocked legitimate traffic. This caused frustrating downtime until an automated teardown process was put in place, which made later tests far more predictable.
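The teardown script itself is not shown in the article. One way to get the same guarantee, sketched here under that caveat, is to pair every fault-injection command with its undo command and run the undo steps in reverse order even when the test fails partway. The name `fault` and the injectable `run` parameter are hypothetical.

```python
import subprocess
from contextlib import contextmanager
from typing import Callable, Iterator, List, Sequence, Tuple

Command = List[str]


def run_checked(cmd: Command) -> None:
    """Default runner: execute the command and raise if it fails."""
    subprocess.run(cmd, check=True)


@contextmanager
def fault(
    steps: Sequence[Tuple[Command, Command]],
    run: Callable[[Command], None] = run_checked,
) -> Iterator[None]:
    """Apply (apply_cmd, undo_cmd) pairs, then always undo what was applied."""
    undo: List[Command] = []
    try:
        for apply_cmd, undo_cmd in steps:
            run(apply_cmd)
            undo.append(undo_cmd)        # recorded only after apply succeeded
        yield
    finally:
        for cmd in reversed(undo):       # undo in reverse order
            try:
                run(cmd)
            except Exception as exc:     # keep going so later steps still run
                print(f"teardown step failed: {cmd}: {exc}")
```

Used as `with fault(steps): run_test()`, the rules are removed whether the test passes, raises, or fails during setup. A killed process would still skip the cleanup, so a standalone reset script remains a useful backstop.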

Another unexpected outcome was the discovery of how some services struggled with temporary failures. When connectivity was restored, retry storms overwhelmed upstream systems, exacerbating the outages that were meant to be mitigated. Adjustments to retry intervals and the incorporation of jitter in backoff algorithms effectively spread out reconnect attempts, alleviating unnecessary load during recovery.
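The article does not specify which backoff scheme was used. The sketch below shows one common variant, capped exponential backoff with the wait drawn uniformly between zero and the current ceiling (often called full jitter). The function names and the default values for `base`, `cap`, and `max_attempts` are assumptions.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def backoff_delay(attempt: int, base: float = 0.5, cap: float = 30.0, rng=random) -> float:
    """Random wait in [0, min(cap, base * 2**attempt)] seconds."""
    ceiling = min(cap, base * (2 ** attempt))
    return rng.uniform(0.0, ceiling)


def call_with_retries(
    op: Callable[[], T],
    max_attempts: int = 5,
    base: float = 0.5,
    cap: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
    rng=random,
) -> T:
    """Call `op`, retrying on ConnectionError with jittered exponential backoff."""
    for attempt in range(max_attempts):
        try:
            return op()
        except ConnectionError:
            if attempt == max_attempts - 1:
                raise                    # out of attempts: surface the error
            sleep(backoff_delay(attempt, base, cap, rng))
    raise ValueError("max_attempts must be at least 1")
```

Because each client picks its own random wait, clients that lost connectivity at the same moment no longer reconnect at the same moment, which is what spreads the load during recovery.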

Additionally, the monitoring and alerting systems required significant adjustments. Some alerts failed to activate, while others generated excessive irrelevant warnings. By refining alert rules to trigger after sustained failures and categorizing them by probable root cause, the individual was able to focus on meaningful indicators rather than merely responding to final symptoms.
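The "trigger after sustained failures" rule can be illustrated with a small state machine. This is a sketch, not the monitoring configuration used in the experiment: the class name and the threshold of three consecutive failed checks are assumptions.

```python
from dataclasses import dataclass


@dataclass
class SustainedFailureAlert:
    """Fire once after `threshold` consecutive failed checks; reset on success."""

    threshold: int = 3
    failures: int = 0
    firing: bool = False

    def observe(self, ok: bool) -> bool:
        """Record one health-check result; return True only when the alert starts firing."""
        if ok:
            self.failures = 0
            self.firing = False
            return False
        self.failures += 1
        if self.failures >= self.threshold and not self.firing:
            self.firing = True
            return True
        return False
```

A single dropped probe no longer pages anyone, and an ongoing outage produces one notification instead of one per failed check. The cost is a detection delay of roughly `threshold` check intervals.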

Weighing Risks and Safer Alternatives

While the benefits of simulating failures are evident, there are compelling reasons why many administrators exercise caution. Conducting chaos experiments in live networks poses risks of data loss and disruption for users. Vendors typically advocate for formal chaos engineering frameworks that include strict controls, rollback mechanisms, and monitoring safeguards to ensure tests are deliberate and reversible.

Some professionals contend that lab results cannot fully replicate production behavior. Real networks feature unpredictable traffic patterns, hardware idiosyncrasies, and concurrent workloads that can complicate modeling. The experience gained from simulations is invaluable but should be viewed as training rather than validation. For home lab operators, maintaining a clear boundary between safe testing and reckless experimentation is crucial.

Best Practices for Safe Testing

To conduct effective simulations without jeopardizing network stability, several best practices should be followed. First, clearly define the scope of tests to ensure that only controlled systems are affected. Automating setup and teardown processes minimizes human error and enhances consistency. Starting with mock environments rather than live services is advisable, as is configuring retry mechanisms before testing to avoid storm effects.

Documentation is essential for tracking test outcomes, noting failures, and recording resolutions for future reference. By implementing these structured practices, chaotic experiments can transform into valuable drills that foster resilience and reliability within networks.

The tc command remains a powerful tool for simulating latency and packet loss, and network namespaces provide safe isolation for failures. Using dnsmasq to simulate DNS failures and small HTTP containers as mock services covers a wide range of failure modes. Tracking key metrics throughout the tests, including alert response times and recovery durations, turns each simulation from an arbitrary exercise into a measurable opportunity for improvement.
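To make those metrics concrete, each run can be reduced to a few timestamps. The record below is a hypothetical layout: the field names are assumptions, and the timestamps are taken to be seconds from a single clock.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class OutageRun:
    """Timestamps (seconds) collected during one simulated outage."""

    fault_injected: float
    fault_removed: float
    alert_fired: Optional[float] = None       # None if no alert ever fired
    service_healthy: Optional[float] = None   # None if the service never recovered

    def time_to_alert(self) -> Optional[float]:
        """Seconds from fault injection until the alert fired."""
        if self.alert_fired is None:
            return None
        return self.alert_fired - self.fault_injected

    def time_to_recover(self) -> Optional[float]:
        """Seconds from fault removal until the service was healthy again."""
        if self.service_healthy is None:
            return None
        return self.service_healthy - self.fault_removed
```

Comparing these two numbers across runs shows whether a change to alert rules or retry settings actually helped. A `None` result is itself a finding: the alert never fired, or the service never came back.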

Conclusion: Insights from Network Simulations

The experience of simulating outages in a home lab has underscored the fragility of network systems. These controlled failures have illuminated hidden dependencies, unreliable retry logic, and vulnerabilities in alerting setups. Each experiment has led to tangible improvements in system architecture and maintenance practices. Although reckless testing is not advisable, a thoughtful, measured approach can significantly enhance network resilience.
