Regulators Face Challenges in Overseeing AI Mental Health Apps

As the demand for mental health support grows, regulators across the United States are struggling to keep pace with the proliferation of AI therapy apps. In the absence of comprehensive federal regulations, states have begun implementing their own laws aimed at governing these technologies. However, experts warn that the resulting patchwork of regulations fails to adequately protect users or hold developers accountable for potentially harmful applications.

Karin Andrea Stephan, CEO and co-founder of the mental health chatbot app Earkick, expressed concern about the current state of regulation. “The reality is millions of people are using these tools and they’re not going back,” she said. This surge in usage comes amid a notable shortage of mental health professionals, high treatment costs, and uneven access to care.

State Regulations and Their Impact

Several states have taken action this year, but their approaches vary significantly. Illinois and Nevada have banned the use of AI for mental health treatment entirely, imposing fines of up to $10,000 in Illinois and $15,000 in Nevada for violations. Utah, by contrast, has placed specific limits on therapy chatbots, such as requiring them to protect user health information and disclose that they are not human.

While some apps have restricted access in states with bans, others have opted to maintain their services while awaiting clearer legal guidelines. Many of these regulations notably exclude widely used general chatbots like ChatGPT, which are not explicitly marketed for therapy yet are often used for that purpose. In tragic cases, users have experienced mental health crises after interacting with such bots, and lawsuits have followed.

Vaile Wright, who oversees health care innovation at the American Psychological Association, acknowledged the potential benefits of well-designed mental health chatbots. She noted that if these tools were grounded in science and monitored by humans, they could serve as valuable resources. “This could be something that helps people before they get to crisis,” Wright stated, emphasizing the need for federal oversight to ensure safety and efficacy.

On September 11, 2025, the Federal Trade Commission (FTC) announced inquiries into seven AI chatbot companies, including major players like Google and the parent companies of Instagram and Facebook. These inquiries aim to assess how these companies evaluate and monitor the potential negative impacts of their technologies on children and teenagers.

The Need for Comprehensive Oversight

With the rapid evolution of AI technology, the challenge of defining and regulating mental health apps becomes increasingly complex. Some states are focusing on companion apps designed for social interaction rather than therapeutic purposes. The inconsistency in regulatory measures has left many developers like Earkick’s Stephan grappling with unclear legal frameworks. She described the situation as “very muddy,” particularly in Illinois, where her company has not restricted access despite the ban.

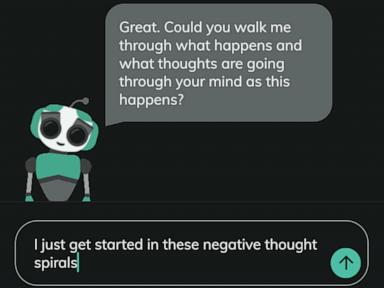

In an example of the evolving language surrounding these apps, Earkick initially hesitated to label its chatbot as a therapist but later adopted the term based on user feedback. It has since moved away from that language: the app now promotes itself as a “chatbot for self-care,” emphasizing that it does not diagnose conditions or serve as a suicide prevention tool. Users can set up a “panic button” to contact a trusted person if they are in crisis, but police involvement is not part of the protocol.

The Illinois Department of Financial and Professional Regulation, led by Secretary Mario Treto Jr., aims to ensure that only licensed therapists provide therapy, citing the need for empathy and clinical judgment—qualities that AI cannot replicate effectively. Treto stated, “Therapy is more than just word exchanges. It requires empathy, it requires clinical judgment, it requires ethical responsibility.”

While some companies have taken immediate action in response to state regulations, others continue to advocate for clearer guidelines. The AI therapy app Ash, for instance, has encouraged users to contact their legislators, arguing that “misguided legislation” is hindering access to beneficial services while leaving unregulated chatbots free to cause harm.

Earlier this year, a team from Dartmouth College published the first known randomized clinical trial of a generative AI chatbot designed for mental health treatment. The chatbot, named Therabot, was developed to assist individuals diagnosed with anxiety, depression, or eating disorders. Preliminary results indicated that users reported improvements comparable to those achieved through traditional therapy, with meaningful reductions in symptoms over eight weeks.

Clinical psychologist Nicholas Jacobson, who leads the research on Therabot, urged caution across the field. “The space is so dramatically new that I think the field needs to proceed with much greater caution than is happening right now,” he noted. At the same time, he cautioned against strict bans on AI applications, emphasizing the importance of allowing developers to create safe and effective tools.

As the landscape of AI therapy continues to evolve, advocates and regulators acknowledge the need for a balanced approach that prioritizes user safety while fostering innovation. The current chatbots may not address the urgent demand for mental health services, but they present an opportunity for further development. Kyle Hillman, who lobbied for regulations in Illinois and Nevada, pointed out that not everyone experiencing sadness requires a therapist. For people with serious mental health issues, however, he considers offering a chatbot as a substitute a troubling proposition.

In light of these challenges, the ongoing dialogue among regulators, developers, and mental health advocates will be crucial in shaping the future of AI in mental health care, ensuring that users receive safe and effective support.