Unlocking Insights: The Power of Multi-Modal Data Analysis

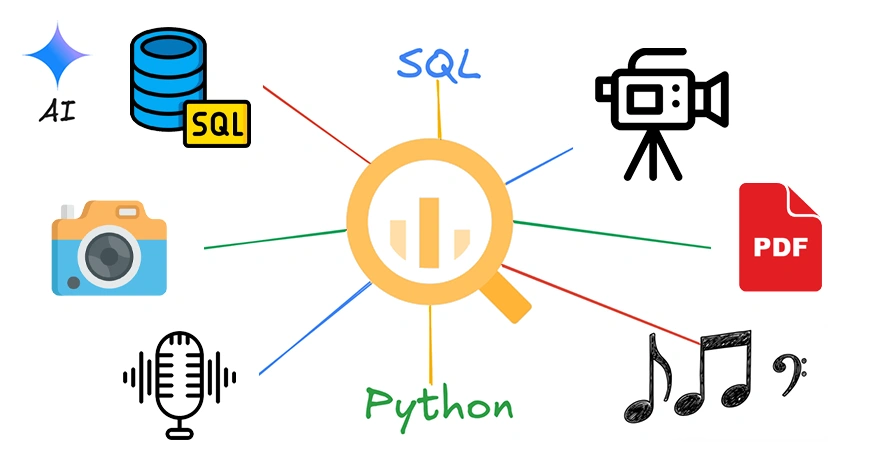

**Multi-modal data analysis** is changing how organizations interpret complex datasets. By integrating different types of data, such as text, images, audio, and numerical information, this approach can improve both understanding and prediction accuracy. Single-modal methods analyze each data type in isolation and can miss the connections between them, whereas multi-modal analysis gives a more comprehensive view by modeling relationships across data sources.

Understanding Multi-Modal Data

Multi-modal data combines two or more data types, which may include text, images, audio, video, and sensor information. For instance, a social media post can combine text and images, while a medical record may include clinician notes, X-rays, and vital sign measurements. Analyzing such data requires techniques that can model how the different data types depend on one another.

The rise of **multi-modal machine learning** has made it practical to analyze structured and unstructured data in tandem. Doing so can improve accuracy and surface relationships that no single data type reveals, which makes the approach particularly valuable in fields like **autonomous driving**, healthcare diagnostics, and recommender systems.

Techniques in Multi-Modal Data Analysis

Multi-modal data analysis employs various methods to explore and interpret datasets. The primary challenge lies in efficiently fusing and aligning information from different modalities. Analysts must navigate diverse data structures, scales, and formats to extract meaningful insights.

Advances in machine learning, particularly deep learning, have significantly improved multi-modal analysis. Techniques such as **attention mechanisms** and **transformer models** let a model learn which elements of one modality are relevant to elements of another, for example which regions of an image correspond to which words in a caption.
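To make the idea of cross-modal attention concrete, here is a minimal sketch of scaled dot-product attention in plain Python, in which text-token vectors act as queries over image-region vectors. The function names and the toy embeddings are hypothetical, and a real transformer would add learned projection matrices and multiple heads.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_modal_attention(queries, keys, values):
    """Scaled dot-product attention: each query (e.g. a text token)
    attends over keys/values from another modality (e.g. image regions)."""
    dim = len(keys[0])
    attended, all_weights = [], []
    for q in queries:
        # Similarity between this query and every key, scaled by sqrt(dim)
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        # Weighted sum of the value vectors
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        attended.append(out)
        all_weights.append(weights)
    return attended, all_weights

# Hypothetical toy embeddings (not produced by any real model)
text_tokens = [[1.0, 0.0], [0.0, 1.0]]
image_regions = [[2.0, 0.0], [0.0, 2.0], [1.0, 1.0]]

attended, weights = cross_modal_attention(text_tokens, image_regions, image_regions)
```

Each row of `weights` sums to 1 and shows how strongly one text token attends to each image region. In this toy setup the first token puts most of its weight on the first region, because their vectors point in the same direction.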

Data preprocessing is crucial for effective analysis. It converts each modality into a numerical representation that retains the essential characteristics of the data while making modalities comparable. For example, text might be transformed into vectors using **TF-IDF** weighting or embeddings from a language model such as **BERT**, while images can be encoded as feature vectors by pre-trained **Convolutional Neural Networks (CNNs)** like **ResNet** or **VGG**.
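As an illustration of turning text into numbers, the following sketch computes TF-IDF vectors using only the Python standard library. It assumes whitespace tokenization and the plain textbook weighting (term frequency times `log(N / df)`); libraries often use smoothed variants, so the exact values will differ from theirs. The function name and sample documents are hypothetical.

```python
import math
from collections import Counter

def tfidf_vectors(documents):
    """Return (vocabulary, vectors) using tf = count / doc length
    and idf = log(N / document frequency)."""
    tokenized = [doc.lower().split() for doc in documents]
    vocab = sorted({tok for doc in tokenized for tok in doc})
    n_docs = len(tokenized)
    # Number of documents in which each token appears at least once
    doc_freq = Counter(tok for doc in tokenized for tok in set(doc))

    vectors = []
    for doc in tokenized:
        if not doc:
            # An empty document maps to the zero vector
            vectors.append([0.0] * len(vocab))
            continue
        counts = Counter(doc)
        vectors.append([
            (counts[tok] / len(doc)) * math.log(n_docs / doc_freq[tok])
            for tok in vocab
        ])
    return vocab, vectors

# Hypothetical sample documents
docs = [
    "chest x-ray shows clear lungs",
    "patient reports chest pain",
    "clear skies today",
]
vocab, vectors = tfidf_vectors(docs)
```

Every document becomes a vector of the same length (one entry per vocabulary term), which is what makes it possible to combine text features with features from other modalities later on. With this weighting, a term that occurs in every document receives a weight of zero.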

Feature extraction involves identifying important characteristics from the data, which simplifies the modeling process. Each modality requires specific techniques; text processing may include tokenization and semantic embedding, whereas image analysis employs convolutional techniques to detect visual patterns.
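The convolutional step on the image side can be sketched in a few lines. The example below slides a hand-written Sobel kernel over a tiny grayscale image to detect a vertical edge; a CNN applies the same sliding-window operation but learns its kernels from data. The helper name `convolve2d` and the toy image are assumptions made for illustration.

```python
def convolve2d(image, kernel):
    """Valid-mode 2D cross-correlation, the sliding-window operation
    that convolutional layers compute (no padding, stride 1)."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows)
                for j in range(k_cols)
            )
            for c in range(out_cols)
        ]
        for r in range(out_rows)
    ]

# Sobel kernel that responds to horizontal changes in intensity
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

# Toy 4x4 grayscale image: dark left half, bright right half
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]

edges = convolve2d(image, SOBEL_X)
print(edges)  # [[36, 36], [36, 36]]
```

The strong, uniform response reflects the dark-to-bright boundary that falls inside every 3x3 window of this image. A uniformly colored image would produce zeros everywhere.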

Fusion Techniques for Multi-Modal Data

Fusion techniques are essential for combining multi-modal data effectively. Three primary strategies are employed: early fusion, late fusion, and intermediate fusion.

**Early fusion** integrates data from multiple sources at the feature level, before the combined representation is passed to a single model. This method is particularly effective when modalities are closely correlated. Conversely, **late fusion** processes each modality independently and then combines the resulting predictions, which offers flexibility and makes it easy to reuse existing single-modal models without significant architectural changes.
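The difference between the two strategies can be shown with a small sketch. Here early fusion is modeled as simple feature concatenation and late fusion as a weighted average of per-modality class probabilities; both are common choices but not the only ones. All function names and numbers are hypothetical.

```python
def early_fusion(text_features, image_features):
    """Concatenate per-modality feature vectors into one joint vector
    that a single downstream model would consume."""
    return list(text_features) + list(image_features)

def late_fusion(modality_probs, weights=None):
    """Combine class probabilities from independently trained
    per-modality models using a weighted average."""
    n_modalities = len(modality_probs)
    if weights is None:
        # Default: trust every modality equally
        weights = [1.0 / n_modalities] * n_modalities
    total = sum(weights)
    n_classes = len(modality_probs[0])
    return [
        sum(w * probs[c] for w, probs in zip(weights, modality_probs)) / total
        for c in range(n_classes)
    ]

# Early fusion: one joint feature vector (hypothetical features)
text_features = [0.2, 0.7]
image_features = [0.9, 0.1, 0.4]
joint = early_fusion(text_features, image_features)

# Late fusion: each model has already produced class probabilities
text_probs = [0.8, 0.2]
image_probs = [0.6, 0.4]
fused = late_fusion([text_probs, image_probs])
```

With equal weights, `fused` is approximately `[0.7, 0.3]`. Passing `weights=[3, 1]` would instead give the text model three times the influence of the image model, which is one way to reflect differing reliability between modalities.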

**Intermediate fusion** combines modalities at one or more intermediate processing levels, typically after each modality has passed through its own encoder. It balances the strengths of early and late fusion and lets designers trade off accuracy against computational cost, making it suitable for complex real-world applications.
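One way to picture intermediate fusion is as a small network in which each modality has its own encoder and the hidden representations are merged before a shared output layer. The sketch below uses hand-picked, untrained weights purely to show the data flow; the layer sizes, the `params` layout, and the function names are all assumptions.

```python
def linear(vector, weights, bias):
    """Dense layer: one output per weight row."""
    return [
        sum(w * x for w, x in zip(row, vector)) + b
        for row, b in zip(weights, bias)
    ]

def relu(vector):
    """Element-wise rectified linear activation."""
    return [max(0.0, x) for x in vector]

def intermediate_fusion(text_vec, image_vec, params):
    """Encode each modality separately, merge the hidden
    representations, then apply a shared output layer."""
    text_hidden = relu(linear(text_vec, *params["text_encoder"]))
    image_hidden = relu(linear(image_vec, *params["image_encoder"]))
    joint_hidden = text_hidden + image_hidden  # fusion happens here
    return linear(joint_hidden, *params["head"])

# Hypothetical hand-picked weights as (weight rows, biases); not trained
params = {
    "text_encoder": ([[1.0, -1.0]], [0.0]),       # 2 inputs -> 1 hidden unit
    "image_encoder": ([[0.5, 0.5, 0.0]], [0.0]),  # 3 inputs -> 1 hidden unit
    "head": ([[1.0, 1.0]], [0.0]),                # 2 hidden units -> 1 output
}

score = intermediate_fusion([0.2, 0.7], [0.9, 0.1, 0.4], params)
```

Unlike early fusion, the raw features never meet directly; unlike late fusion, the modalities interact before any final prediction is made. In a trained system the encoders and the shared layer would be learned jointly.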

The payoff of multi-modal data analysis is richer insight. By analyzing correlations among diverse data types, organizations can uncover relationships and dependencies that would remain hidden in single-modal analysis.

The benefits of this approach extend to increased performance, faster time-to-insights, and scalability. By allowing for the combination of multiple data types, organizations can generate deeper insights and maintain robust analytical systems, even in the presence of missing or noisy data.

As organizations increasingly adopt multi-modal data analytics, they gain substantial competitive advantages. However, success relies on strategic investments in infrastructure and governance frameworks. With automated tools and cloud platforms facilitating access, early adopters are poised to excel in a data-driven economy.

In summary, multi-modal data analysis represents a significant advance in data interpretation. By drawing on diverse information sources, organizations can gain a deeper understanding of complex relationships that traditional single-modal approaches may overlook.