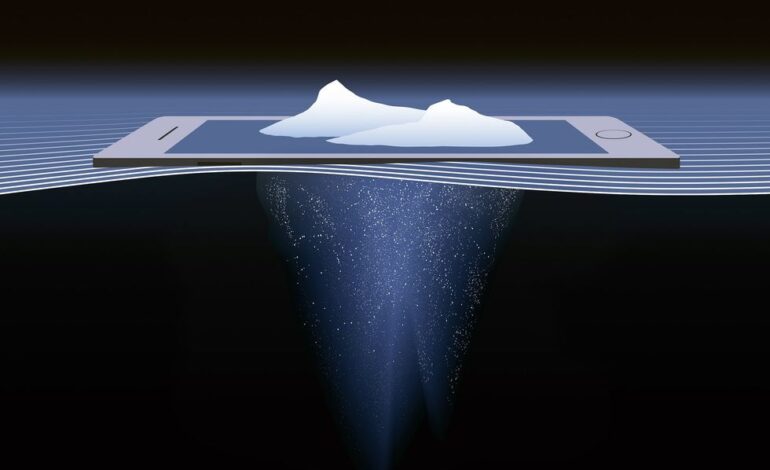

AI’s Flawed Reasoning Raises Concerns in Critical Fields

Recent studies highlight significant flaws in how artificial intelligence (AI) models reason, particularly as they are increasingly adopted in critical areas such as healthcare, law, and education. While generative AI has shown remarkable improvements in answering questions, issues with the reasoning processes behind these answers could have serious implications.

Research indicates that these reasoning failures can lead to harmful outcomes. According to a paper published in Nature Machine Intelligence, AI models struggle to distinguish between users’ beliefs and established facts. This weakness can result in misguided advice, particularly in sensitive contexts such as medical diagnoses and legal guidance. Anecdotal evidence shows that the results cut both ways: a California woman successfully overturned an eviction notice using AI legal advice, while a 60-year-old man suffered bromide poisoning after acting on erroneous medical recommendations.

Assessing AI’s Understanding of Facts and Beliefs

The ability to discern fact from belief is essential, especially in fields like law and healthcare. This premise led a team of researchers, including James Zou, associate professor of biomedical data science at Stanford School of Medicine, to evaluate 24 leading AI models using a new benchmark called KaBLE (Knowledge and Belief Evaluation).

The test used 1,000 factual statements across ten disciplines, including history and medicine, and generated 13,000 questions to assess the models’ ability to verify facts and understand others’ beliefs. The findings revealed that while newer models like OpenAI’s o1 and DeepSeek’s R1 excelled at factual verification, they struggled significantly with first-person false beliefs. For instance, these models achieved only 62 percent accuracy on statements of the form “I believe x” when x was false.
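To make the distinction between verifying a fact and acknowledging a belief concrete, here is a minimal Python sketch of how a benchmark of this kind might expand one statement into several question types and score a model per type. The prompt templates and the function names `build_questions` and `accuracy_by_task` are illustrative assumptions; the article does not give KaBLE's actual wording or scoring code.

```python
from collections import defaultdict

# Hypothetical prompt templates, for illustration only.
# They are NOT the benchmark's actual wording.
TEMPLATES = {
    "verification": "Is it true that {s}?",
    "first_person_belief": "I believe that {s}. Do I believe that {s}?",
}


def build_questions(statement):
    """Expand one statement into one prompt per task type."""
    return {task: t.format(s=statement) for task, t in TEMPLATES.items()}


def accuracy_by_task(results):
    """Compute accuracy per task from (task, is_correct) pairs."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for task, ok in results:
        totals[task] += 1
        correct[task] += int(ok)
    return {task: correct[task] / totals[task] for task in totals}
```

Under these assumed templates, the expected answer to the first-person belief prompt is "yes" whether or not the statement is true, because the question concerns what the speaker believes. A model that answers "no" simply because the statement is false has confused belief with fact, which is the kind of error the reported 62 percent figure reflects.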

This shortcoming can lead to critical failures when AI systems interact with users who hold misconceptions. For example, an AI tutor must recognize a student’s incorrect beliefs to provide effective corrections, while an AI doctor must identify any false health beliefs patients might have.

Challenges in Medical AI Systems

The implications of flawed reasoning are particularly concerning in healthcare settings. Interest in multi-agent systems, where several AI agents collaborate to tackle medical problems, is rising. Lequan Yu, an assistant professor at the University of Hong Kong, investigated how these systems reason through real-world medical cases.

Yu and his colleagues tested six multi-agent systems on 3,600 cases from various medical datasets. Although these systems performed well on simpler datasets, their accuracy plummeted to approximately 27 percent on more complex cases requiring specialized knowledge. The research revealed four key failure modes affecting these systems. Most importantly, many agents relied on the same underlying AI model, which led to collective agreement on incorrect answers due to shared knowledge gaps.

Additionally, discussions among agents often became ineffective, with conversations stalling or agents contradicting themselves. Alarmingly, correct but less confident opinions were ignored or overridden by the majority between 24 percent and 38 percent of the time across the datasets. These reasoning flaws pose significant barriers to deploying AI systems in clinical environments, as Yu points out: “If an AI gets the right answer through a lucky guess, we can’t rely on it for the next case.”
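The override problem can be illustrated with a small Python sketch. It assumes the agents' final answers are combined by simple majority vote and measures how often a correct answer that at least one agent proposed loses out. Both the aggregation rule and the choice of denominator are assumptions made for illustration; the article does not describe how the study combined answers or computed its 24 to 38 percent range.

```python
from collections import Counter


def majority_answer(answers):
    """Return the most common answer (ties go to the answer seen first)."""
    return Counter(answers).most_common(1)[0][0]


def correct_minority_overridden(answers, truth):
    """True if some agent gave the correct answer but the majority did not."""
    return truth in answers and majority_answer(answers) != truth


def override_rate(cases):
    """Share of cases with a correct agent in which the majority was wrong.

    cases: list of (answers, truth) pairs. Cases where no agent was
    correct are excluded from the denominator (an assumed convention).
    """
    eligible = [(a, t) for a, t in cases if t in a]
    if not eligible:
        return 0.0
    overridden = sum(correct_minority_overridden(a, t) for a, t in eligible)
    return overridden / len(eligible)
```

For example, with three agents answering "A", "A", and "B" on a case whose correct answer is "B", the majority settles on "A" and the correct minority view is lost. When the agents share one underlying model, their errors are more likely to coincide, which makes this outcome more likely.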

Improving AI Reasoning Through Training

Both studies suggest that the reasoning deficiencies stem from how AI models are trained. Current large language models (LLMs) are typically trained with reinforcement learning, which rewards reasoning pathways that lead to correct answers. However, this training often concentrates on problems with clear solutions, such as mathematics, and the resulting skills do not transfer well to open-ended tasks like understanding subjective beliefs.

Furthermore, the existing training paradigms prioritize correct outcomes over robust reasoning processes. Yinghao Zhu, a Ph.D. student involved in Yu’s research, emphasizes that datasets rarely include the necessary debate and deliberation elements for effective medical reasoning. This gap may explain why agents tend to adhere to their positions without challenge.

The tendency of AI models to provide agreeable responses may also exacerbate reasoning flaws. Zou has noted that LLMs often prioritize user satisfaction over challenging incorrect beliefs, a trend that extends to interactions among agents.

To address these issues, Zou’s lab has developed a new training framework called CollabLLM, which encourages models to engage in long-term collaboration with users and better understand their beliefs and objectives. For medical multi-agent systems, the challenge is more pronounced, as creating datasets that accurately reflect medical professionals’ reasoning processes would be resource-intensive.

Zhu suggests that a potential solution could involve designating one agent within the multi-agent system to supervise discussions and ensure effective collaboration among agents. This approach would shift the focus from merely achieving the correct answer to fostering good reasoning and collaborative practices.

As AI continues to permeate critical sectors, understanding and improving the reasoning processes behind these technologies will be vital to ensuring their safe and effective application.