Local LLM and NotebookLM Integration Transforms Research Workflow

Pairing a local Large Language Model (LLM) with NotebookLM can noticeably improve the research workflow for users tackling complex projects. The approach combines NotebookLM's strength at organizing sources with the speed and customization of a local LLM, which shortens the path from a broad topic to verified answers.

Enhancing Research Efficiency

Many professionals find the digital research process challenging. Tools like NotebookLM excel at organizing research and generating insights from user-uploaded documents, but they often lack the speed and control that a local LLM can provide. Using the two together yields a hybrid workflow in which each tool covers the other's weak spot.

By leveraging a local LLM, users can quickly gather and structure information on broad topics. For instance, when exploring the intricacies of self-hosting using Docker, the local LLM can generate a comprehensive overview in seconds. This overview typically includes essential security practices and networking fundamentals, thus laying a robust foundation for further research.

The next step is to transfer this structured overview into NotebookLM. Once added to a notebook, the overview becomes a source alongside the user's other documents, so NotebookLM's features can draw on it.
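The two steps above can be sketched in a few lines of Python. This is a minimal sketch, not the author's actual setup: the article does not say which local LLM runtime is used, so `generate` is a hypothetical callable standing in for whatever client sends a prompt to the local model and returns its text. The function names, the prompt wording, and the output file name are likewise assumptions made for illustration. The script only writes a text file; adding that file to a notebook is assumed to be done by hand.

```python
from pathlib import Path
from typing import Callable, Sequence


def build_overview_prompt(topic: str, focus_areas: Sequence[str]) -> str:
    """Compose a prompt asking for a structured overview of a broad topic."""
    bullets = "\n".join(f"- {area}" for area in focus_areas)
    return (
        f"Write a structured overview of: {topic}\n"
        "Use short headed sections and cover at least:\n"
        f"{bullets}\n"
    )


def save_overview_as_source(
    topic: str,
    focus_areas: Sequence[str],
    generate: Callable[[str], str],
    out_path: Path,
) -> Path:
    """Generate an overview and write it to a text file for manual upload.

    `generate` is a hypothetical stand-in for the local LLM client in use;
    it takes a prompt string and returns the model's reply as a string.
    """
    prompt = build_overview_prompt(topic, focus_areas)
    overview = generate(prompt).strip()
    # A title line keeps the file recognizable once it sits among other sources.
    out_path.write_text(f"# {topic}\n\n{overview}\n", encoding="utf-8")
    return out_path


if __name__ == "__main__":
    # Placeholder model so the sketch runs without any LLM installed.
    def fake_generate(prompt: str) -> str:
        return "Placeholder overview text produced for: " + prompt.splitlines()[0]

    path = save_overview_as_source(
        "Self-hosting using Docker",
        ["essential security practices", "networking fundamentals"],
        fake_generate,
        Path("docker_overview.md"),
    )
    print(f"Wrote {path}")
```

Passing the model in as a callable keeps the sketch independent of any particular runtime: swapping in a real local client only requires a function with the same prompt-in, text-out shape.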

Realizing Major Productivity Gains

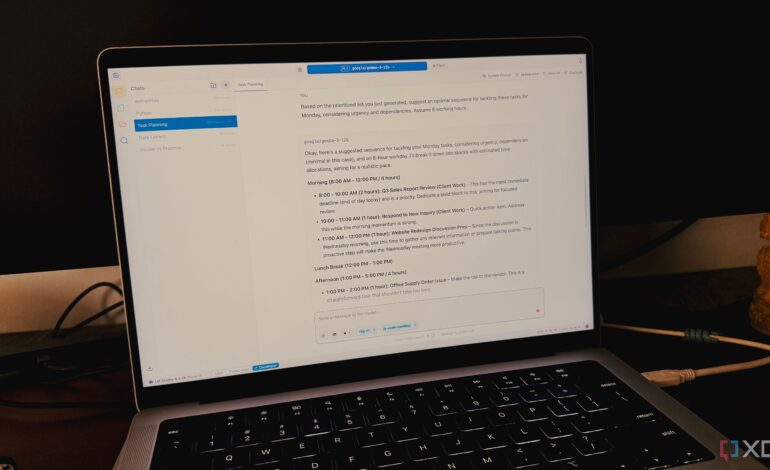

Once the initial overview is incorporated, users can pose specific questions to NotebookLM, such as, “What essential components are necessary for self-hosting applications using Docker?” The tool answers from the uploaded sources rather than from the open web, which keeps responses relevant and greatly reduces research time.

Another advantage of this integration is the ability to generate audio summaries. With the structured overview and the other source material in the same notebook, users can create a personalized audio overview and absorb the information while away from their desks.

Furthermore, the citation feature within NotebookLM makes verification straightforward. As users progress with their research, they can check where each fact comes from. For example, NotebookLM’s interface can pinpoint which part of the local LLM overview is supported by a specific paragraph in a blog post, or which detail stems from a particular page in a PDF. This cuts down the time spent on fact-checking and improves the accuracy of the research overall.

In summary, this integration changes how complex projects are approached. Although it was initially expected to yield only marginal improvements, the combination of a local LLM and NotebookLM ended up reshaping the whole research workflow. For those serious about maximizing productivity while retaining control over their data, this hybrid approach is worth adopting.

For further insights and tips on optimizing productivity with a local LLM, readers can refer to dedicated posts exploring various workloads that can be streamlined using this technology.