Exploring “Vibe Coding”: Risks of AI-Generated Programming

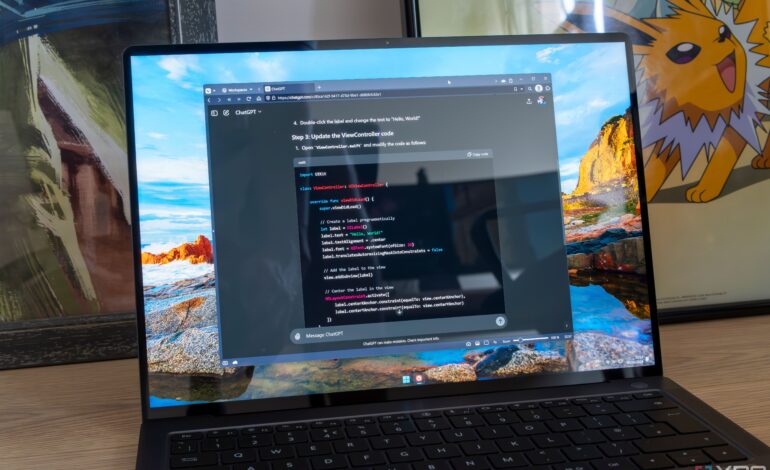

The rise of AI tools like ChatGPT has given birth to a new practice known as “vibe coding.” The term means different things to different people. Some use AI to generate entire programs without regard for code quality or security, while others use these tools to speed up tasks they could do themselves. A closer look at the vulnerabilities in AI-generated code raises significant concerns about relying on these tools.

I have a programming background, having studied C, Java, Ruby, and Python, so I decided to examine the code ChatGPT generates. My aim was to assess the vulnerabilities it introduces, given how dangerous poorly constructed code can be. The findings alarmed me: they revealed flaws that could have serious consequences if the code were deployed in real-world applications.

Vulnerabilities in AI-Generated Code

One notable example involved asking ChatGPT for a C++ program that collects system statistics and publishes them to an MQTT topic (MQTT is a lightweight publish/subscribe protocol common in Internet of Things (IoT) devices). The initial code had several problems, including no enforcement of SSL/TLS and inadequate password handling: credentials were sent in plaintext, exposing them to interception. This oversight is all the more concerning because ChatGPT gave no warning about these security flaws.
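The original program was C++ and is not reproduced here. As a rough illustration of the fix, the Python sketch below builds a TLS context that verifies the broker's certificate and hostname, and reads credentials from the environment instead of hard-coding them. The function names and the `MQTT_USERNAME`/`MQTT_PASSWORD` variables are hypothetical, and how the context is handed to an MQTT client depends on the client library, so check its documentation.

```python
import os
import ssl


def make_tls_context(ca_file=None):
    """Return a client-side TLS context that verifies the broker.

    ssl.create_default_context() turns on certificate verification
    (CERT_REQUIRED) and hostname checking. ca_file optionally points at a
    private CA bundle (hypothetical; omit it to use the system trust store).
    """
    ctx = ssl.create_default_context(cafile=ca_file)
    # Refuse protocol versions older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def load_credentials(env=None):
    """Read MQTT credentials from environment variables (hypothetical names)."""
    env = os.environ if env is None else env
    user = env.get("MQTT_USERNAME")
    password = env.get("MQTT_PASSWORD")
    if not user or not password:
        raise RuntimeError("MQTT_USERNAME and MQTT_PASSWORD must be set")
    return user, password
```

With verification enabled, the TLS handshake fails if the broker presents an untrusted certificate or one issued for a different hostname, so the credentials are never sent over that unauthenticated connection.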

The code also had a significant input validation flaw: it accepted a message interval of zero or less. A user could therefore flood the MQTT broker with messages, overwhelming both the broker and the device sending the data and causing a denial of service.
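A minimal guard is to reject out-of-range intervals before the publish loop starts. The sketch below assumes the interval arrives as a string (for example, from a command-line argument); the function name and the 1-second floor and 1-hour ceiling are hypothetical values to tune for the device and broker.

```python
MIN_INTERVAL_S = 1.0     # hypothetical floor: at most one message per second
MAX_INTERVAL_S = 3600.0  # hypothetical ceiling: at least one message per hour


def parse_interval(raw: str, lo: float = MIN_INTERVAL_S, hi: float = MAX_INTERVAL_S) -> float:
    """Parse a publish interval in seconds and reject out-of-range values."""
    try:
        value = float(raw)
    except ValueError:
        raise ValueError(f"interval must be a number, got {raw!r}") from None
    # The chained comparison is False for NaN and infinity, so those are rejected too.
    if not (lo <= value <= hi):
        raise ValueError(f"interval must be between {lo} and {hi} seconds, got {raw!r}")
    return value
```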

Worse, the code performed no validation at all on the topic and payload parameters, so a malicious user could publish arbitrary, harmful data. For instance, they could publish to sensitive reserved topics, or send messages that cause subscribed devices to execute commands affecting system resources.
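One way to close this gap, sketched in Python: check topics against the MQTT rules for published topic names (non-empty, at most 65,535 bytes of UTF-8, no NUL character, no `+` or `#` wildcards), refuse topics beginning with `$`, which brokers reserve for their own use (such as `$SYS`), and confine the program to a single namespace. The function names, the `sensors/stats/` prefix, and the 4 KiB payload cap are hypothetical choices for this illustration.

```python
MAX_TOPIC_BYTES = 65535            # MQTT limit on UTF-8 encoded strings
MAX_PAYLOAD_BYTES = 4096           # hypothetical application limit
ALLOWED_PREFIX = "sensors/stats/"  # hypothetical namespace for this program


def validate_topic(topic: str) -> str:
    """Reject topic names MQTT forbids for PUBLISH or that fall outside our namespace."""
    # encode() raises UnicodeEncodeError (a ValueError subclass) on lone surrogates.
    encoded = topic.encode("utf-8")
    if not 1 <= len(encoded) <= MAX_TOPIC_BYTES:
        raise ValueError("topic must be 1 to 65535 bytes of UTF-8")
    if "\x00" in topic:
        raise ValueError("topic must not contain a NUL character")
    if "+" in topic or "#" in topic:
        raise ValueError("wildcards are not allowed in published topic names")
    if topic.startswith("$"):
        raise ValueError("topics starting with '$' are reserved for the broker")
    if not topic.startswith(ALLOWED_PREFIX):
        raise ValueError(f"topic must start with {ALLOWED_PREFIX!r}")
    return topic


def validate_payload(payload: bytes) -> bytes:
    """Cap the payload size so one message cannot exhaust broker or device memory."""
    if len(payload) > MAX_PAYLOAD_BYTES:
        raise ValueError(f"payload exceeds {MAX_PAYLOAD_BYTES} bytes")
    return payload
```

An allowlisted prefix is stricter than the protocol rules alone, but it keeps a compromised or misconfigured publisher out of topics that other devices treat as commands.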

Comparative Analysis of Code Samples

Analysis of a Python script for listing directory contents revealed further vulnerabilities. Although the script claimed to handle errors effectively, it passed user input into a system command, enabling command injection. Because an attacker could execute arbitrary commands this way, any code that runs system commands must treat user input as untrusted.
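The safer pattern is to avoid the shell entirely. The original script is not shown, so this sketch (with a hypothetical function name) lists a directory with `os.scandir`; input such as `; cat /etc/passwd` is then treated as an odd path rather than a second command. If an external program is genuinely needed, passing its arguments as a list to `subprocess.run` without `shell=True` gives similar protection against shell metacharacters.

```python
import os

# Vulnerable pattern (do not use): the path is pasted into a shell command,
# so input such as "; cat /etc/passwd" would run a second command.
#     os.system("ls " + user_path)


def list_directory(user_path: str) -> list[str]:
    """Return the sorted entry names in user_path without invoking a shell."""
    try:
        with os.scandir(user_path) as entries:
            return sorted(entry.name for entry in entries)
    except FileNotFoundError:
        raise ValueError(f"no such directory: {user_path!r}") from None
    except NotADirectoryError:
        raise ValueError(f"not a directory: {user_path!r}") from None
    except PermissionError:
        raise ValueError(f"permission denied: {user_path!r}") from None
```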

A C program for reading CSV files highlighted the challenges of memory safety. Although it handled basic memory allocation correctly, it did not account for input longer than its buffer, creating a risk of buffer overflow. It also ignored edge cases such as empty fields and quoted strings, which could cause data to be parsed incorrectly.
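Python's `csv` module already handles the edge cases the C program missed, so the sketch below uses it to show the expected behavior; a C fix would need bounded reads (for example, `fgets` with the buffer size) plus a quote-aware parser. The `parse_csv` name and the per-field cap are hypothetical, and because `csv.field_size_limit` is process-wide, the sketch restores the previous limit afterwards (which is not thread-safe).

```python
import csv
import io


def parse_csv(text: str, max_field_chars: int = 1024) -> list[list[str]]:
    """Parse CSV text, honoring quotes and empty fields, with a per-field size cap."""
    # field_size_limit() sets a process-wide limit and returns the old one.
    previous = csv.field_size_limit(max_field_chars)
    try:
        # Oversized fields raise csv.Error instead of overrunning a buffer.
        return list(csv.reader(io.StringIO(text, newline="")))
    finally:
        csv.field_size_limit(previous)
```

For example, the line `a,"b,c",,d` yields four fields: `a`, `b,c`, an empty string, and `d`.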

A more complex example was a C program that hosts a basic web server for file uploads. Although the code generally worked, it had serious vulnerabilities, including unbounded memory allocation for both headers and content. This could lead to resource exhaustion and buffer overflows, which might let an attacker read or corrupt the server's memory.
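The core fix is to cap every size the client controls before allocating for it. The Python sketch below reads a simplified HTTP/1.1 request head from a binary stream (such as one returned by `socket.makefile("rb")`), rejects headers over a limit, and validates `Content-Length` against a body limit before reading. The limits, the `RequestTooLarge` exception, and the `read_request` name are hypothetical, and chunked transfer encoding is deliberately not supported.

```python
MAX_HEADER_BYTES = 8 * 1024        # hypothetical limits
MAX_BODY_BYTES = 10 * 1024 * 1024


class RequestTooLarge(Exception):
    """Raised when a client exceeds a configured size limit."""


def read_request(stream, max_header=MAX_HEADER_BYTES, max_body=MAX_BODY_BYTES):
    """Return (head_lines, body) from a binary stream, enforcing size caps."""
    head = bytearray()
    # Read until the blank line ending the headers, never exceeding max_header bytes.
    while not head.endswith(b"\r\n\r\n"):
        byte = stream.read(1)
        if not byte:
            raise ValueError("connection closed before end of headers")
        head += byte
        if len(head) > max_header:
            raise RequestTooLarge("request headers exceed limit")
    lines = head[:-4].decode("iso-8859-1").split("\r\n")

    length = None
    for line in lines[1:]:
        name, sep, value = line.partition(":")
        if sep and name.strip().lower() == "content-length":
            value = value.strip()
            # Accept only plain ASCII digits; reject signs and duplicate headers.
            if length is not None or not (value.isascii() and value.isdigit()):
                raise ValueError("invalid or duplicate Content-Length")
            length = int(value)
    if length is None:
        length = 0

    # Check the declared size before reading (and allocating for) the body.
    if length > max_body:
        raise RequestTooLarge("request body exceeds limit")
    body = stream.read(length)
    if len(body) != length:
        raise ValueError("body shorter than Content-Length")
    return lines, body
```

Reading the head one byte at a time keeps the logic simple but is slow; a production server would read in blocks and, for large uploads, stream the body to disk rather than hold it in memory.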

The takeaway from these analyses is clear: AI-generated code can be a useful tool for prototyping and testing, but it must be approached with caution. Users should not rely on AI for programming tasks without a fundamental understanding of code quality and security.

AI should supplement a developer’s skills rather than replace them. As AI changes how software is written, programmers must stay vigilant and informed. The vulnerabilities identified in these examples underscore the importance of thorough code review and testing before deployment.

In conclusion, “vibe coding” can be a starting point for learning and experimentation, but users must engage critically with the code AI generates. By understanding its limitations and potential pitfalls, developers can maximize the benefits of AI tools while minimizing the risks of using them.